Don't stop learning because of AI

You’ve probably heard it a lot: “AI will take over; developers won’t be needed anymore.” Then there are those annoying video ads in which someone claims you can use AI to build a million-dollar business and relax on a tropical beach. I’m not saying nobody has found a hack to get rich really fast with AI. But for most serious companies, this narrative is completely false, and in this article, I want to explain why.

LLMs are not participating

The big misconception begins with how LLMs (and I’m specifically talking about LLMs here) are perceived as “human-like.” This is very understandable: you ask it a question, it responds in a way that feels empathetic or intuitive, and it seems like you’re talking to something very much like yourself. In reality, though, it’s only predicting the next word (more precisely, the next token) based on patterns learned from its training data. Don’t get me wrong: I don’t want to downplay the remarkable capabilities of LLMs. I just want to clarify how they are perceived versus what they actually are. Let’s use two visuals to illustrate the difference between how it feels and what it actually is:

It feels like:

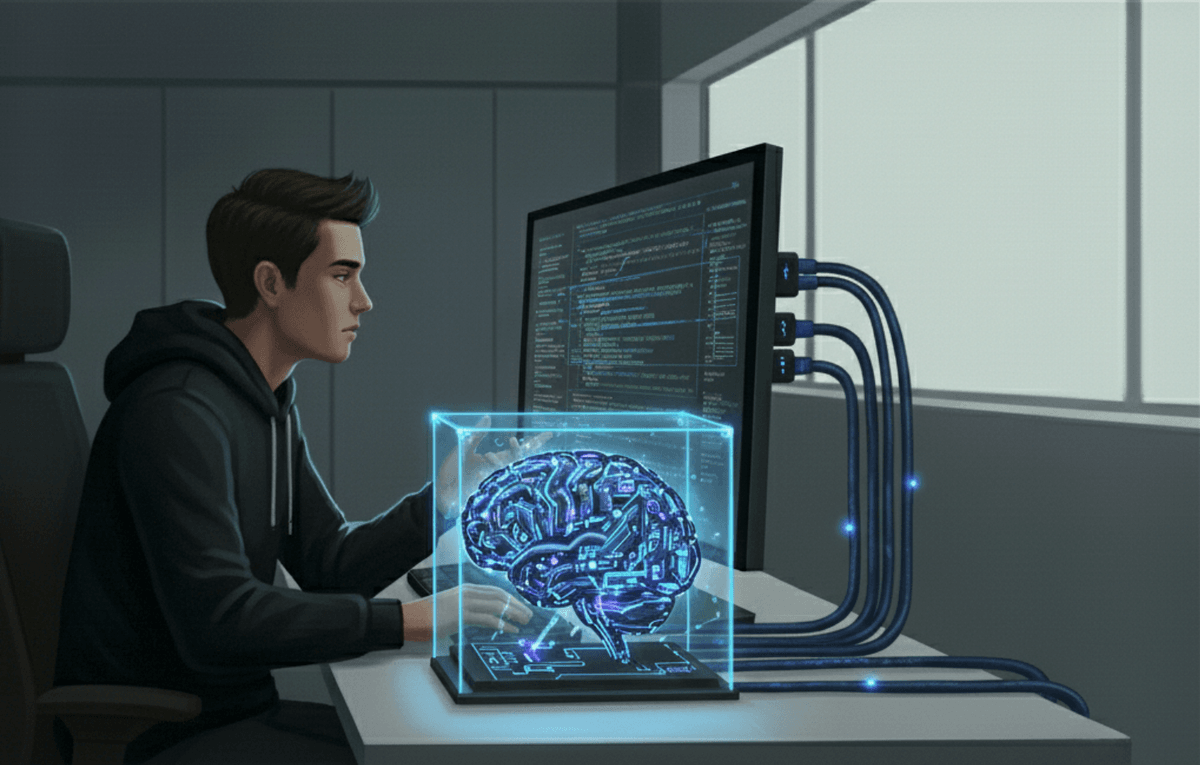

It is more like:

The LLM brain (neural network) is visualized as standing on the developer’s desk, but in reality, the LLM runs either locally on the developer’s machine or in the cloud, as with ChatGPT and similar services.

So how is this digital brain different from our human brain?

- It doesn’t change over time: When humans think, the physiology of our brains changes over time, which is how we learn, form memories, and so on. The neural network of an LLM is not changed during inference; only the contents of its context window change.

- It has no (physical) awareness: As you can see, this brain has no senses connected to it. The only input it receives is the tokens we send through its context window: the glass box.
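To make these two differences a bit more concrete, here is a deliberately tiny sketch in Python. It is not how a real LLM works internally: a real model is a neural network with (typically) billions of learned weights and predicts tokens rather than whole words, while this toy just counts which word follows which in a small, made-up training text. The names `TRAINING_TEXT`, `train`, `predict_next`, and `generate` are hypothetical and exist only for this illustration. What the toy does share with an LLM is the shape of inference: the "weights" are fixed once training is done, each step only predicts the next word from what is in the context, and the only thing that grows is the context itself.

```python
from collections import Counter, defaultdict

# Hypothetical toy "training data"; a real LLM learns from vastly more text.
TRAINING_TEXT = "the cat sat on the mat . the cat ate the fish ."


def train(text):
    """Count which word follows which (a bigram table). Done once, up front."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    # Convert to plain dicts; after training, nothing below writes to this table.
    return {word: dict(nexts) for word, nexts in counts.items()}


def predict_next(weights, context):
    """Greedily pick the most frequent follower of the last word in the context."""
    if not context:
        return None
    followers = weights.get(context[-1], {})
    if not followers:
        return None
    return max(followers, key=followers.get)


def generate(weights, prompt, max_words=5):
    """Inference: the weights are only read; only the context grows."""
    context = prompt.split()
    for _ in range(max_words):
        word = predict_next(weights, context)
        if word is None:
            break
        context.append(word)
    return " ".join(context)


weights = train(TRAINING_TEXT)
print(generate(weights, "the cat"))  # the cat sat on the cat sat
```

The toy quickly loops because it only ever looks at the last word, and calling `generate` again does not teach it anything new: `weights` stays exactly as it was after training, just as an LLM’s weights stay fixed while you chat with it.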

Okay, great, it’s not a human! It can still generate an app for me, so why should I learn how to code? Because being a valuable developer for a company isn’t just about writing code; it’s about understanding how the system works and being able to communicate about it. You are participating in the human world, and an LLM can never do that.

Stay happy

The second reason you should keep learning is that it makes you happier as a developer. Your happiness at work matters, not just to you, but also to your company. Stay curious! If you want to understand how a specific technical challenge can be solved, don’t simply rely on your LLM agent to fix the issue for you; try to solve it yourself. It may feel like the slower path, but in the long run, it isn’t, because the knowledge you gain is valuable to the company: it is knowledge held by a (happy) human who participates in the company.

What about AGI?

I’m not an expert, and what I’m saying here is just my interpretation. If there are experts out there who can prove me wrong, I’m open to being corrected.

I believe the gap between the static LLM shown in the second image and an AI that can truly perceive and actively participate in reality is much larger than LLM companies make it seem. An LLM with fixed neural weights is fundamentally different from AGI, which would require continuous learning. This creates a scaling problem: current LLMs can serve millions of users because their weights don’t change, so the same model can simply be copied to as many servers as needed. A truly learning AGI, whose weights would keep changing as it learns, cannot be replicated and distributed the same way. I’m not saying AGI will never happen, but to me, it feels like the idea of AGI is sometimes used by LLM companies as a way to attract more investment. I wouldn’t be surprised if this ends up being the cause of the AI bubble.

How to use AI (LLMs)

So how should you use LLMs? I can only give my personal opinion here: I think a good way to use LLMs is to speed up tasks that are otherwise tedious and not particularly valuable to the developer. This can be as simple as autocomplete, quickly generating a starting point for an application, or generating unit tests that you then only have to validate. The general rule should be: if you understand what the LLM is generating, you can use it to gain efficiency. But as soon as you start generating code you don’t understand yourself, you’re in the danger zone, because knowledge about the system is no longer guaranteed to be in the hands of a human participating in the company.

This also means you must always review code generated by the LLM. Otherwise, the overall system architecture may become unfamiliar to you, leading to a loss of knowledge about the system, which again poses a risk to the company.
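As an illustration of what “generating unit tests and then only having to validate they are correct” can look like, here is a minimal sketch using Python’s built-in `unittest` module. The `reserve_stock` function and its rules are hypothetical, invented for this example; imagine the tests below were generated by an LLM. Reviewing them is not just about checking that they pass, but about checking that every expectation matches what the business actually wants.

```python
import unittest


def reserve_stock(available: int, requested: int) -> int:
    """Hypothetical warehouse rule: reserve items and return what is left."""
    if requested < 0:
        raise ValueError("requested quantity must be non-negative")
    if requested > available:
        raise ValueError("not enough stock")
    return available - requested


class ReserveStockTest(unittest.TestCase):
    # Imagine these tests were LLM-generated: quick to produce, but each
    # expectation still encodes a business rule a human has to confirm.

    def test_reserves_part_of_the_stock(self):
        self.assertEqual(reserve_stock(10, 3), 7)

    def test_reserving_everything_leaves_zero(self):
        self.assertEqual(reserve_stock(5, 5), 0)

    def test_over_reservation_is_rejected(self):
        # The review question: should the warehouse refuse this order, or
        # allow a backorder? The code cannot answer that; the business can.
        with self.assertRaises(ValueError):
            reserve_stock(2, 3)

    def test_negative_request_is_rejected(self):
        with self.assertRaises(ValueError):
            reserve_stock(5, -1)
```

Saved as, say, `test_stock.py` (a hypothetical file name), it runs with `python -m unittest test_stock`. The tests passing only tells you the code matches the tests; whether refusing an over-reservation is the right rule is exactly the kind of knowledge that has to stay with a human.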

Hypothetical case

Let’s consider a hypothetical company that runs a warehouse. To keep things simple, imagine the company is made up of a CEO, a manager, a designer, and two developers. Together, they are responsible for maintaining the warehouse stock management software.

Suppose the manager hears about AI and is told that developers can be replaced by it. The decision is made to replace the second developer. The reasoning is straightforward: with the help of AI, the first developer can supposedly be more productive and deliver the same amount of code.

To a certain extent, this idea works: AI can indeed help speed up coding. However, being a developer is not only about writing code, and when you look closer, the actual benefits are not so clear. For example, if the developer being replaced was responsible for managing communication with the payment provider team, that part of the workload can’t simply be automated away with AI. This responsibility now falls to the remaining developer, whose own productivity decreases as a result. Furthermore, if we want to ensure that a human maintains a thorough understanding of the application’s architecture, the remaining developer now has even more aspects of the system to keep track of.

It gets worse if we start replacing more human workers on the team:

Let’s suppose both developers and the designer are replaced, and the manager is now responsible for maintaining the application. The reasoning is that LLMs are so advanced at generating features that there’s no longer a need for designer or developer skills. At first, the manager is impressed by how well the LLM translates business requirements into actual working software.

However, this approach quickly runs into trouble when the payment provider releases an update that introduces a breaking change, even though it was published as a bug-fix release. As a result, the payment system fails. The issue isn’t that the LLM couldn’t help fix the problem; rather, the manager doesn’t understand what’s going wrong. They can see the error but have no idea how to investigate or troubleshoot the root cause.

Ultimately, the company decides to hire an external IT professional to diagnose and resolve the issue. Unfortunately, it takes a full week of missed payments before the problem is finally fixed.

Now, let’s take it even further: imagine replacing every member of the company with LLMs.

At this point, the company becomes completely disconnected from the human world. This can go wrong in countless ways; imagine trying to do business with a company that has no genuine human involvement or understanding.

What I’m not saying

I’m not saying that AI isn’t disruptive to the job market; it certainly is. The way we work is changing drastically, which brings various work-related challenges. For example:

- The collapse of the junior developer market (Video about this subject)

- The issue of creativity theft with generative AI

I think it’s really important to address these issues and to ensure the human brain remains central in discussions about these topics.

The human brain is more beautiful than you think

Don’t underestimate the beauty and power of the human brain. It’s a biological masterpiece that cannot be replaced that easily. Keep learning and stay curious!

Images created with WizzyPrompt